Research

Reality Deck: Visual Analytics Take Center Stage at White House Data to Knowledge to Action Conference

Collaborators and Researchers:

Arie Kaufman (SBU), Klaus Mueller (SBU), Hong Qin (SBU), Dimitris Samaras (SBU), Amitabh Varshney (UMD), Kaloian Petkov (SBU), Charilaos Papadopoulos (SBU), Ken Gladky (SBU)

The Reality Deck gives scientists, researchers, government officials, and corporate leaders the ability to interact naturally with big data in the world’s highest-resolution immersive display.

The Reality Deck, completed through a National Science Foundation grant with matching funds from Stony Brook University, resides within the University’s Center of Excellence in Wireless & Information Technology (CEWIT). Consisting of 416 27” LCD displays in a vast 33’ by 19’ by 11’ high workspace, the Reality Deck offers an aggregate resolution of more than 1.5 billion pixels. Engulfed by data, researchers have the opportunity to engage with information on a gigantic scale. In terms of acuity, Reality Deck is a dream come true. It pushes the limits of the human eye and provides a panoramic, exploratory experience.
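As a rough check on the aggregate resolution quoted above, the short sketch below multiplies the panel count by a per-panel resolution. The 2560 x 1440 figure is an assumption (a common native resolution for 27” monitors) and is not stated in the text; only the count of 416 displays comes from it.

```python
# Assumption (not stated in the text): each 27" panel is 2560 x 1440 pixels.
PANEL_WIDTH_PX = 2560
PANEL_HEIGHT_PX = 1440
PANEL_COUNT = 416  # number of LCD displays given in the text


def aggregate_pixels(panels, width_px, height_px):
    """Total pixel count of a tiled display wall."""
    return panels * width_px * height_px


total = aggregate_pixels(PANEL_COUNT, PANEL_WIDTH_PX, PANEL_HEIGHT_PX)
print(f"{total:,} pixels (~{total / 1e9:.2f} billion)")
# prints: 1,533,542,400 pixels (~1.53 billion)
```

Under that assumption the total comes to a little over 1.5 billion pixels, which is consistent with the figure given for the facility.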

Into the Future with Visual Analytics

The facility is heavily used for a range of applications, from cosmology and weather prediction to firefighting, medical diagnosis, reconnaissance, flood mapping, drug design, and clean room simulation. The number of applications keeps growing because data is like geometry: when you can look at it from any angle, it is easier to integrate and analyze. Currently, traditional input devices (keyboards, mice, and joypads) are used to control the Reality Deck, but research scientists are developing natural user interfaces based on gestures. For more information, visit www.realitydeck.org.

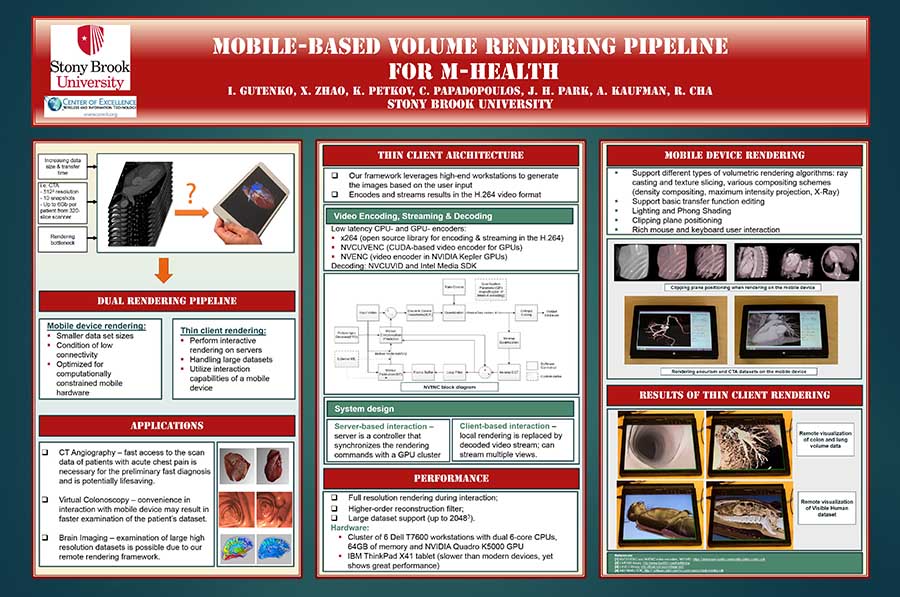

Mobile-based Volume Rendering Pipeline for m-Health

Collaborators and Researchers:

Ievgenia Gutenko (SBU), Xin Zhao (SBU), Kaloian Petkov (SBU), Charilaos Papadopoulos (SBU), Ji Hwan Park (SBU), Arie Kaufman (SBU), Ronald Cha (Samsung Research Lab)

Given the growing use of mobile devices as platforms for medical applications, CDDA collaborators identified a need for a double pipeline that combines medical visualization of Computed Tomography (CT) and Magnetic Resonance Imaging (MRI) data. The double pipeline would ease the constraints on rendering resources, improve access to large volumetric medical datasets, and improve the display quality and interaction available on a tablet that must handle large data.

This research focused on two architectures for volumetric rendering of medical data: 1) rendering the data fully on the mobile device, and 2) a thin-client architecture, in which the entire dataset resides on a remote server that renders the image and streams it to the mobile device. Because mobile devices have established new ways of interacting, the researchers are also exploring and developing 3D user interfaces, including touch-based interaction, for working with the volume-rendered visualization. The project develops an implementation scheme, compares the results of the two volumetric rendering approaches on a mobile device, and demonstrates the work applied to CTA visualization on a commodity tablet device.
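To make the difference between the two architectures concrete, here is a minimal sketch in plain Python. It is not the project's implementation: the text does not say which rendering algorithm is used, so the sketch assumes a generic front-to-back ray-casting compositor, and every function name, the transfer function, and the byte-packed "stream" are hypothetical stand-ins.

```python
def ramp_transfer(value, lo=0.3, hi=0.7):
    """Hypothetical transfer function: gray level = value, opacity ramps from lo to hi."""
    opacity = min(max((value - lo) / (hi - lo), 0.0), 1.0)
    return value, opacity


def composite_ray(samples, transfer):
    """Front-to-back alpha compositing of scalar samples along one ray."""
    color, alpha = 0.0, 0.0
    for sample in samples:
        c, a = transfer(sample)
        color += (1.0 - alpha) * a * c
        alpha += (1.0 - alpha) * a
        if alpha >= 0.99:  # early ray termination: the ray is effectively opaque
            break
    return color, alpha


def render(volume):
    """Orthographic render along z: volume[z][y][x] in [0, 1] -> image[y][x]."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    return [
        [composite_ray([volume[z][y][x] for z in range(depth)], ramp_transfer)[0]
         for x in range(width)]
        for y in range(height)
    ]


def quantize(image):
    """Convert floating-point gray levels in [0, 1] to 8-bit values."""
    return [[min(255, int(round(p * 255))) for p in row] for row in image]


def render_on_device(volume):
    """Architecture 1: the device holds the whole volume and renders it itself."""
    return quantize(render(volume))


def server_render_frame(volume):
    """Architecture 2, server side: render, then pack the frame as raw bytes."""
    image = quantize(render(volume))
    height, width = len(image), len(image[0])
    return width, height, bytes(p for row in image for p in row)


def client_decode_frame(width, height, payload):
    """Architecture 2, client side: unpack the streamed bytes into pixel rows."""
    return [list(payload[y * width:(y + 1) * width]) for y in range(height)]


# Tiny 2 x 2 x 2 test volume with two fully opaque voxels.
volume = [[[1.0, 0.0], [0.0, 0.0]],
          [[0.0, 0.0], [0.0, 1.0]]]
local_image = render_on_device(volume)
w, h, payload = server_render_frame(volume)
remote_image = client_decode_frame(w, h, payload)
print(local_image, remote_image == local_image)
```

In this sketch both paths produce the same image. What differs is where the volume lives and what crosses the network: the on-device path needs the full volume in the tablet's memory, while the thin-client path transfers only `width * height` bytes per frame and leaves the volume and the rendering cost on the server.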

Read a recent paper about this research by clicking: Full CDDA paper